Role

Product Design Intern

Tools/Skills

Figma, Motion Graphics

Team

2 Product Designers, 1 PM & 2 Developers

Duration

8 Weeks, Summer 2025

Rethinking digital connections

Gen Z has more ways than ever to meet people online, yet many feel their connections lack depth. Most social and dating apps focus on appearances and quick interactions, leaving little room for users to express who they truly are. At Pair AI, we set out to rethink how people form digital connections.

OBJECTIVES

Our overarching goal

This was a highly collaborative process: I navigated the AI space alongside the team's designers and developers. Our goal was to rapidly create an MVP for this concept, with my role entailing the following…

MOODBOARDING

Understanding the space

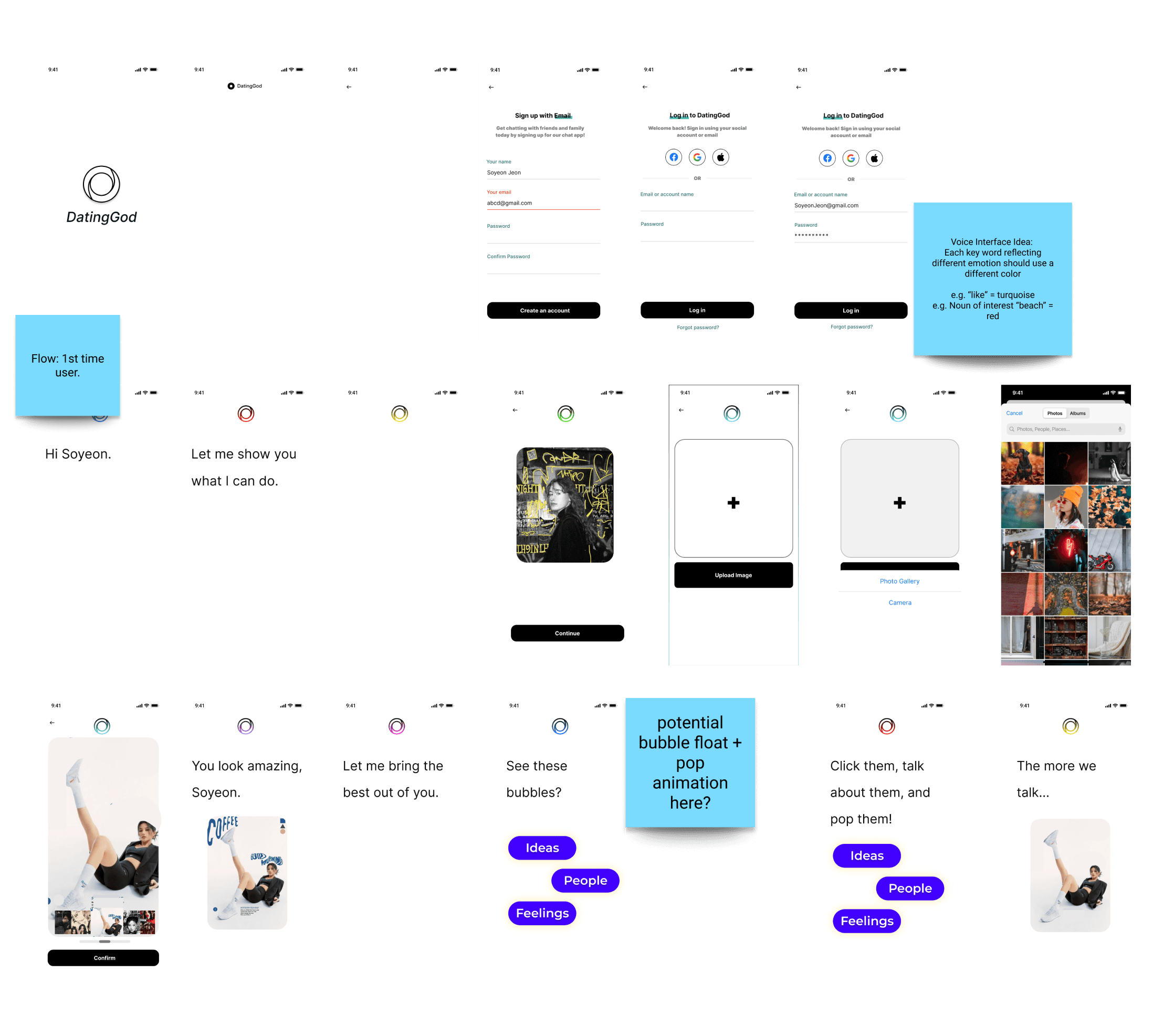

Rapid prototyping

Iterating through prototypes, we created initial versions of how a user might move through a conversation and receive personalized collages based on the interaction.

Decoding conversation patterns

EXPERIMENTATION

Experimenting with motion

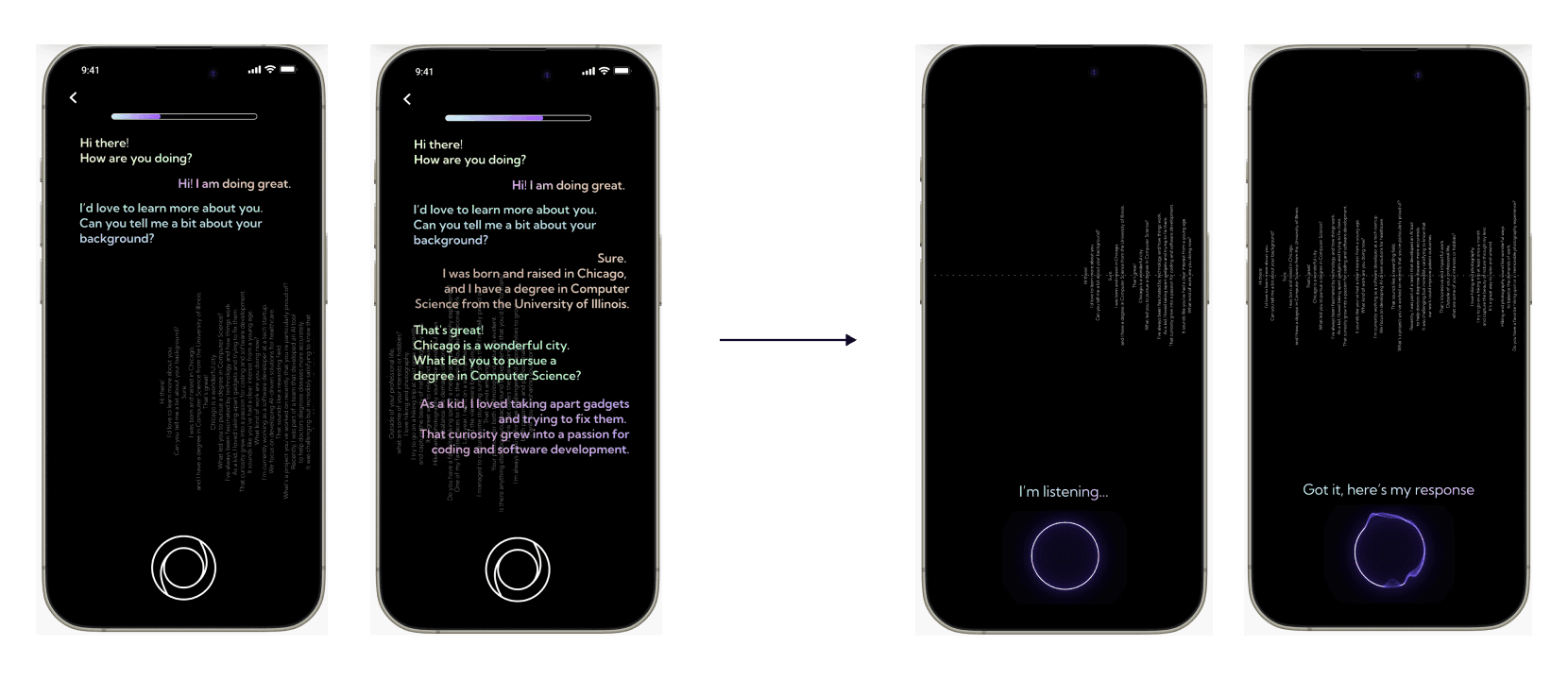

Iterating through prototypes

Beyond the motion visuals, I also had to consider how text appeared on screen and what kinds of feedback users needed throughout the conversation. This, too, required extensive iteration and testing.

COLLAGES

Creating the collages

After each conversation, the AI generates a personalized personality collage. To make each collage adapt to its input, I collaborated closely with engineers to define how text and elements would populate the template, developing a coordinate-based visual system they could easily implement, as shown below.
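To give a rough sense of what a coordinate-based system like this can look like on the engineering side, here is a minimal sketch. It is an illustration only, not the team's actual implementation: the `Slot` class, the slot names, the coordinates, and the `layout_collage` function are all hypothetical. The assumption is that each template region is stored as fractions of the canvas, so the same template can be resolved to pixels at any size.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Slot:
    """A named region of the template, in normalized (0 to 1) coordinates."""
    name: str
    x: float       # left edge as a fraction of canvas width
    y: float       # top edge as a fraction of canvas height
    width: float   # fraction of canvas width
    height: float  # fraction of canvas height


# Hypothetical template: slot names and positions are made up for illustration.
TEMPLATE = [
    Slot("headline", x=0.10, y=0.05, width=0.80, height=0.15),
    Slot("trait_1", x=0.10, y=0.25, width=0.35, height=0.30),
    Slot("trait_2", x=0.55, y=0.25, width=0.35, height=0.30),
]


def layout_collage(content, canvas_width, canvas_height, template=TEMPLATE):
    """Place each piece of content into its slot and return pixel boxes.

    Slots with no matching content are skipped, so the layout adapts to
    whatever a given conversation produced.
    """
    placed = []
    for slot in template:
        if slot.name not in content:
            continue
        placed.append({
            "slot": slot.name,
            "text": content[slot.name],
            "left": round(slot.x * canvas_width),
            "top": round(slot.y * canvas_height),
            "width": round(slot.width * canvas_width),
            "height": round(slot.height * canvas_height),
        })
    return placed


# Example: two of the three slots are filled for a 1000 x 2000 canvas.
example = layout_collage(
    {"headline": "Night owl", "trait_1": "Loves film photography"},
    canvas_width=1000,
    canvas_height=2000,
)
```

Keeping the coordinates normalized means a designer can specify positions once, and engineers can render the same template at different screen sizes without redefining it.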

2. An Intuitive AI-Human Voice Interface

The full AI voice interface combined visualized speech and responsive feedback to create a more intuitive and accessible experience. Users could also personalize aspects of the interaction to better suit their preferences.

Lastly, I built a full website with tutorials and product specifications, showcasing the voice interface and attracting a substantial group of early alpha testers.

Testing & results